
Generate AI images with ComfyUI and Flux 1.0

A guide to generating AI images with ComfyUI and Flux 1.0

How to run image-generation models locally with ComfyUI

In general, I found two easy ways to run image-generation models locally:

- ComfyUI

- Stable Diffusion web UI

We are going to use ComfyUI. It offers considerably more functionality than Stable Diffusion web UI, and Stable Diffusion web UI does not support all of the models that ComfyUI does.

With ComfyUI you can customise pretty much every step of the generation process, because it works as a node-based workflow editor.
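To make the workflow-editor idea concrete, here is a minimal sketch of a basic text-to-image graph written in ComfyUI's API (JSON) format, where every node has a `class_type` and its inputs are either literal values or links of the form `[source_node_id, output_index]`. The node classes are ComfyUI built-ins; the checkpoint file name, prompt, and sampler settings are illustrative assumptions, and `queue_prompt` assumes a ComfyUI server running at its default address `http://127.0.0.1:8188`.

```python
import json
import urllib.request

# Assumption: ComfyUI is running locally on its default host and port.
COMFYUI_URL = "http://127.0.0.1:8188/prompt"


def build_text_to_image_workflow(ckpt_name: str, prompt: str, seed: int = 0) -> dict:
    """Return a minimal text-to-image graph in ComfyUI's API format.

    Keys are node ids; a list value such as ["1", 1] links an input to
    output index 1 of node "1". All settings below are illustrative.
    """
    return {
        # Loads the model; outputs: 0 = MODEL, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": ckpt_name}},
        # Positive prompt, encoded with the checkpoint's CLIP
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": prompt, "clip": ["1", 1]}},
        # Empty negative prompt
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": "", "clip": ["1", 1]}},
        # Blank latent canvas the sampler starts from
        "4": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
        # Denoising loop
        "5": {"class_type": "KSampler",
              "inputs": {"seed": seed, "steps": 20, "cfg": 1.0,
                         "sampler_name": "euler", "scheduler": "simple",
                         "denoise": 1.0, "model": ["1", 0],
                         "positive": ["2", 0], "negative": ["3", 0],
                         "latent_image": ["4", 0]}},
        # Latent -> pixels, then write the image to ComfyUI's output folder
        "6": {"class_type": "VAEDecode",
              "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
        "7": {"class_type": "SaveImage",
              "inputs": {"filename_prefix": "flux", "images": ["6", 0]}},
    }


def queue_prompt(workflow: dict, url: str = COMFYUI_URL) -> bytes:
    """POST the workflow to a running ComfyUI server and return the raw response body."""
    data = json.dumps({"prompt": workflow}).encode("utf-8")
    req = urllib.request.Request(url, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return resp.read()
```

For example, `queue_prompt(build_text_to_image_workflow("your-model.safetensors", "a lighthouse at dusk"))` would queue one image while ComfyUI is running. Each node here corresponds to a box in the editor and each link to a wire, which is why every step can be swapped or customised.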

Installing ComfyUI

ComfyUI project: GitHub repository & installation instructions (https://github.com/comfyanonymous/ComfyUI)

Windows

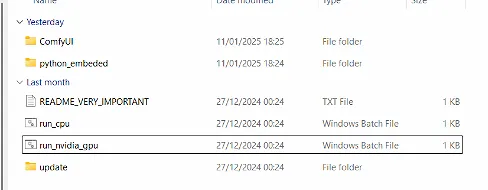

Download the zip file from the GitHub repository.

Extract the zip (for example with 7-Zip).

Put your model in the ComfyUI\models\checkpoints folder.

If you add a new model, you need to restart ComfyUI.
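A quick way to confirm a model file landed in the right place is to list the checkpoints folder. This is a minimal sketch with a hypothetical helper, `list_checkpoints`, assuming the folder layout above and that checkpoints use the `.safetensors` or `.ckpt` extensions; it only inspects the disk and does not talk to ComfyUI.

```python
from pathlib import Path
from typing import List

# Assumption: checkpoint files use one of these extensions.
CHECKPOINT_EXTENSIONS = {".safetensors", ".ckpt"}


def list_checkpoints(comfyui_dir: str) -> List[str]:
    """Hypothetical helper: return checkpoint file names in <comfyui_dir>/models/checkpoints."""
    folder = Path(comfyui_dir) / "models" / "checkpoints"
    if not folder.is_dir():
        # Wrong path or folder not created yet
        return []
    return sorted(
        p.name
        for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in CHECKPOINT_EXTENSIONS
    )
```

If your file shows up here but not in ComfyUI's model dropdown, ComfyUI most likely needs the restart mentioned above.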

Prerequisites for a manual install from source:

- Python 3.10

- Git
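Before cloning, you can check both prerequisites from Python itself. This minimal sketch (the `check_prerequisites` name is hypothetical) compares the running interpreter against the Python 3.10 listed above and uses `shutil.which` to see whether `git` is on your PATH; pass a different `required` tuple if you use another Python version.

```python
import shutil
import sys


def check_prerequisites(required=(3, 10)) -> dict:
    """Hypothetical helper: report the Python version and whether Git is on PATH."""
    return {
        "python_version": "%d.%d.%d" % sys.version_info[:3],
        # Exact major.minor match against the version the guide lists
        "python_ok": sys.version_info[:2] == tuple(required),
        # shutil.which returns None when the executable is not found
        "git_found": shutil.which("git") is not None,
    }


if __name__ == "__main__":
    for name, value in check_prerequisites().items():
        print(f"{name}: {value}")
```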

Instead of using the zip, you can also install from source by running the following commands in your Windows terminal:

git clone https://github.com/comfyanonymous/ComfyUI.git

cd ComfyUI

pip install -r requirements.txt

To start the portable build, open the ComfyUI folder you extracted and run run_nvidia_gpu.bat (for NVIDIA GPU users) or run_cpu.bat if you need to run on the CPU.
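The bat files start the portable build. For a manual install from the git clone, ComfyUI is started with `python main.py` from the ComfyUI folder, adding `--cpu` to run on the CPU only. The sketch below wraps that in Python; `comfyui_command` and `start_comfyui` are hypothetical helpers, and it assumes the current interpreter is the one you installed the requirements into.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def comfyui_command(comfyui_dir: str, use_cpu: bool = False) -> List[str]:
    """Hypothetical helper: build the command line that starts ComfyUI from a git clone."""
    # main.py is ComfyUI's entry point
    cmd = [sys.executable, str(Path(comfyui_dir) / "main.py")]
    if use_cpu:
        # CPU-only mode, the manual-install counterpart of run_cpu.bat
        cmd.append("--cpu")
    return cmd


def start_comfyui(comfyui_dir: str, use_cpu: bool = False) -> subprocess.Popen:
    """Launch ComfyUI as a child process; by default the UI is served at http://127.0.0.1:8188."""
    return subprocess.Popen(comfyui_command(comfyui_dir, use_cpu), cwd=comfyui_dir)
```

Once the server is up, open http://127.0.0.1:8188 in your browser to reach the workflow editor.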